Plotting IR data in Python

What’s the best way to learn any new language, be it in programming or not?

Right, applying it!

So in here, we are going to look at an example with actual real-world utility, by far my favorite kind of examples. The level of this is task is somewhere between the beginner and intermediate stage. If you just started out with python, you will probably still be able to follow the explanations, and if you are already a bit further, I invite you to try and solve the problem yourself before you read the solution.

The Problem

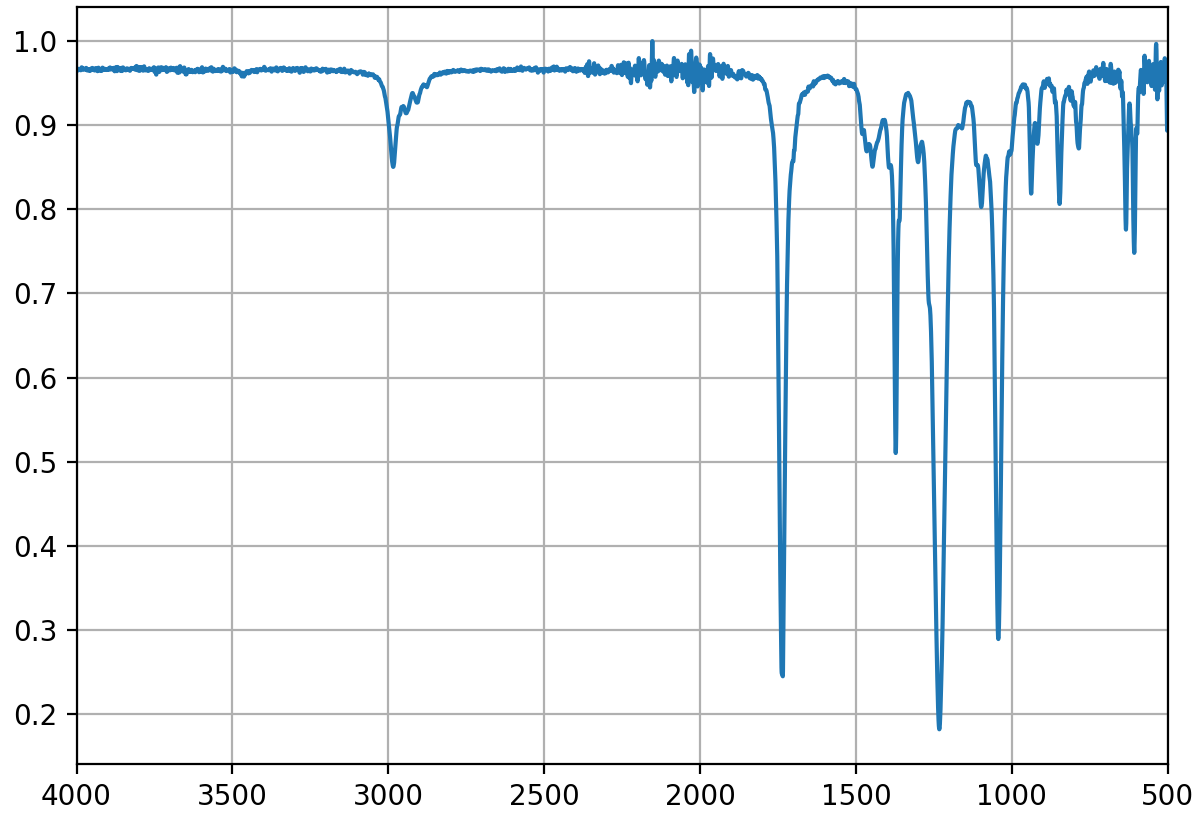

Plotting instrument data is a frequent problem in many disciplines. At the point I wrote this script, my particular problem was to plot data generated by an infrared (IR) spectrometer. Conveniently, the data was in CSV format, which is generally quite accessible. Now there are other ways to solve this problem that don’t require coding. For one, I could have used Origin, even Excel, but at the drawback of having to run through a more or less manual process for every spectrum. I preferred to write my own little tool, that plots as many spectra as I need at once, without interaction, and in publication quality.

The Code

(The full code and a sample data file can be downloaded from GitHub)

So how do we go about this? First, let’s take a look at the data and see what we have to deal with. Fortunately, it is structured in a straightforward and constant fashion. The CSV file for every spectrum contains two columns, one with the wavenumber (in cm-1) and the other with the transmission (in arbitrary units) at that point. As a little complication, there are some rows with metadata (e.g. measurement parameters) both before and after the actual data. From this observation, step 1 on our route is rather clear: Load the CSV data into python and remove the unnecessary metadata.

So let’s go for it!

Step 1: Load the data

To load the data, we will use Pandas, a useful Python package used ubiquitously in data science.

It will provide us with a DataFrame structure, that we use to hold and manipulate the spectral data.

In addition, it facilitates file I/O quite a bit as it runs the annoying bits of opening, streaming, and closing the file under its hood.

import pandas as pd

filename = 'sample_data.csv' # the sample file has to be in the same directory as this script

# read all data from file into DataFrame xydata

xydata = pd.read_csv(filename, names=["X", "Y"])

# find the last row of the first batch of metadata

data_start = xydata.index[xydata["X"] == "XYDATA"].item()

# find the first row of the second batch of metadata

data_end = xydata.index[xydata["X"] == "##### Extended Information"].item()

# slice the DataFrame so that the metadata is discarded

xydata = xydata.iloc[data_start + 1: data_end, :]

# reset indices of the new DataFrame

xydata.reset_index(inplace=True, drop=True)

# ensure correct datatype

xydata = xydata.astype("float64")

Let’s go through this line by line.

After importing the pandas package, we define a variable to hold the filename.

It is usually more convenient to define things like this visibly, instead of hiding the filename in the following read_csv() function.

read_csv() takes care of loading the data.

If you followed the file I/O tutorial, you will notice that we don’t use the with open(...) statement this time.

This is because pandas handles the file internally and reduces the operation to the read_csv() function.

For our convenience, we pass the optional argument names to the function.

In the resulting DataFrame, this list will be used to name the columns.

Once the data is loaded, we want to remove the metadata section. We could do this in a hardcoded fashion, i.e. configure the script to cut off the first 19 rows and the last 40 rows. You will immediately see that this is not an ideal way to handle metadata. If you don’t have absolutely clear specifications from the instrument manufacturer that your data will always be shaped exactly this way, then this is certainly a bad idea. What’s the alternative? Usually you will find something that is constant across all your data. In our special case, the data is always preceded by a line “XYDATA” and followed by a line “##### Extended Information”. That makes it simple for us to determine the position of the data independent of the number of rows it spans.

To obtain the index where column X has the value XYDATA, we use the DataFrame.index attribute.

We then directly apply the .item() method to obtain the first (and in this case, only) element in the index.

This last one might be not immediately intuitive, but it is necessary because the DataFrame.index attribute does not return a plain index number or list of index numbers but rather an “Index” object.

While this has some uses on its own, we don’t need it here and just get the item from it.

We have now obtained the exact location of the two metadata portions without hardcoding their positions.

What remains is to slice xydata using its .iloc property.

The brackets behind .iloc let us indicate which slice of the DataFrame we want.

First, we put the row indices which we just determined.

Then, after the comma, we use : as placeholder to indicate we want ALL columns.

The last two commands contain some cleanup operations.

Resetting the index of the DataFrame is not strictly necessary in our case, but it is often more intuitive if the DataFrame starts at index 0, not 20.

Finally, we want to ensure our data has a numeric datatype like float64.

We need to do this because when pandas read our data in the first place, it has inferred data types for every column.

And since in the metadata part, there has been text in both columns, pandas has initially assigned the object datatype (similar to python’s built-in string datatype for text).

Fortunately, this is easy to change by calling the DataFrame.astype() method.

This already concludes the first part of our work, the data is now read in and stored in a DataFrame. What’s next?

Step 2: Processing

Our IR data needs only very simple processing. Actually, I only want to do one thing here, which is scaling the data to a maximum transmission of 1. You could of course do arbitrarily complicated things here (baseline correction, peak picking,…), but for the purpose of quickly plotting spectra, this normalization will do.

xydata["Y"] = xydata["Y"] / xydata["Y"].max()

This one is pretty simple as the mathematical operation is applied element-wise to the entire column of the DataFrame.

Step 3: Plotting

The central part of this program is likely the hardest to set up as a beginner.

To plot, we employ matplotlib, which is a comprehensive library that will more or less plot anything.

Its complexity can render it a bit inaccessible to beginners (who am I kidding…I still consider it rather inaccessible).

However, this is THE standard package for plotting in Python.

There are of course other packages available, but a fair share of them just throw a fancy cover over matplotlib that still runs under the hood.

Conveniently, what we need to plot is quite simple so let’s take a look at the code:

import matplotlib.pyplot as plt

# initialize a figure with one axis

fig, axs = plt.subplots(1, 1)

# plot the data

axs.plot(xydata["X"], xydata["Y"])

# cut the plot

axs.set_xlim(4000, 500)

axs.set_ylim(None, 1.05)

# set informative labels

axs.set_xlabel('wavenumber [$\mathregular{cm^{-1}]}$') # matplotlib can interpret this LateX expression

axs.set_ylabel('transmission [a.u.]')

axs.grid(True) # show a grid to help guide the viewer's eye

axs.set_title(filename) # set a title from the filename of the input data

fig.tight_layout() # rearrange everything to make sure it fits the canvas

plt.show()

This code might seem much, but even if you have not used matplotlib before, you can guess the purpose of most commands easily. I want to focus on the few more obscure.

Using plt.subplots(1, 1), we initialize our plot.

The numbers specify that we want one row and one column (thus obtaining one axes).

Each figure has at least one axes, but it could have multiple if we had chosen those values differently.

“Why do we need this?” you ask.

Well, often you might want to place different plots in one figure or just add a colorbar or something like that.

Multiple axes per figure allow you to do that.

Since you just learned what we need different axes for, it should now be logical that .plot(x, y) is a method of axs, not fig.

Next, the axs.set_...() and axs.grid() commands do exactly what their names suggest. With fig.tight_layout(), we specify a formatting parameter.

This is optional but ensures that everything we just prepared for plotting fits into the figure.

And finally, with plt.show(), we display our figure.

Nice, ain’t it?

Go ahead and try this minimal script with the sample_data.csv file provided in the GitHub repository.

Make sure to place the sample file in the same directory that your script is executed in.

Bonus Task: Wrap it into a command line tool

Now what we have so far is nice, although not quite convenient enough.

What I was looking for when writing this was a tool that would just give me spectra or cutouts of them at the press of a button.

I basically want to open a terminal, type a command and one or more filenames and – tadaaa – receive plots with all spectra.

Something like this, where -x or -y flags would define the spectral region to be plotted:

$ plotir sample_data.csv -x 1800 1550

Again, if you have basic familiarity with Python and the command line, I advise you try this for yourself before looking into my solution.

Our required action is threefold.

First, we need to link the command plotir to our script.

One way to do this is to generate a symbolic link, located in a directory which is in PATH and that links to our script IRvisualizer.py (which can be anywhere on the system).

In addition, our script now needs to tell the command line that it is indeed a python script and wants to be invoked by a python interpreter.

On Unix systems, we can do this by providing a shebang as the first line of our script:

#!/usr/bin/env python

Sadly, on Windows the process is more complicated.

Second step: We need to make the script suitable for processing a batch of spectra. This is easier if we divide the script into functions. Here’s the code we developed above, but re-written as functions.

def import_irdata_from_csv(filename):

"""

Read csv and identify start and end of dataset by "XYDATA" and "##### Extended Information" keywords

"""

xydata = pd.read_csv(filename, names=["X", "Y"])

data_start = xydata.index[xydata["X"] == "XYDATA"].item()

data_end = xydata.index[xydata["X"] == "##### Extended Information"].item()

xydata = xydata.iloc[data_start + 1: data_end, :]

xydata.reset_index(inplace=True, drop=True)

xydata = xydata.astype("float64")

return xydata

def normalize_y(df):

"""

Normalize the Y-values of pandas.DataFrame to a maximum of 1

"""

df["Y"] = df["Y"] / df["Y"].max()

return df

def plot_spectrum(df, filename, xlim_start, xlim_end, ylim_start, ylim_end):

"""

Plot an IR spectrum from a pandas.DataFrame with "X" and "Y" columns

"""

fig, axs = plt.subplots(1, 1) # initialize a figure with one axis (since 1 row, 1 column)

axs.plot(df["X"], df["Y"]) # plot the data

# cut the plot according to user input

axs.set_xlim(xlim_start, xlim_end)

axs.set_ylim(ylim_start, ylim_end)

# set informative labels

axs.set_xlabel('wavenumber [$\mathregular{cm^{-1}]}$') # matplotlib can interpret this LateX expression

axs.set_ylabel('transmission [a.u.]')

axs.grid(True) # show a grid to help guide the viewer's eye

axs.set_title(filename) # set a title from the filename of the input data

fig.tight_layout() # a standard option for matplotlib

plt.draw()

return

All steps of our script are now in isolated reusable blocks and if we had a number of spectra to process, we could just do something like this in the main part:

x_start = 4000 # default x-limit

x_end = 500 # default x-limit

y_start = None # default y-limit

y_end = None # default y-limit

spectra_list = ["A.csv", "B.csv", "C.csv"]

for spectrum in spectra_list:

df = import_irdata_from_csv(spectrum)

df = normalize_y(df)

plot_spectrum(df, spectrum, x_start, x_end, y_start, y_end)

Now for the third and last step, we want to enable the user to specify parameters directly when invoking the script.

Right now we still have hardcoded variables like x_start = 4000 in our script.

Of course, it would be inconvenient to have to change the code, whenever we want a cutout of the spectra, say, to inspect a carbonyl vibration peak more closely.

Much rather would we have the hardcoded values above as the default, but let the user overwrite them from the outside.

For that, we will need to get the list of parameters (x_start … spectra_list) from the terminal instead of the hardcoded variables.

To define this interaction with the world outside of python, there are some built-in modules, and we are going to use the simplest to do the job, sys.

sys can do a wide range of system-specific things, and we employ sys.argv which is simply the list of command line arguments passed to your script.

If you remember how we planned to invoke the script:

$ plotir sample_data.csv -x 1800 1550

sys.argv will take this entire command, split at the spaces and save the elements as a list.

So sys.argv[0] will be the name of the script,plotir, whereas sys.argv[4] is 1550 in this case.

In our python code we therefore want to check for -x or -y flags and, if we find either, assign the consecutive values to the appropriate variables.

We put this at the start of our program:

import sys

# deal with the arguments passed from command line

arguments = sys.argv # create a copy of sys.argv (since arguments will be modified)

if len(arguments) == 1:

sys.exit("ERROR: No argument given. Please give at least one csv file with IR data.")

print(arguments, "\n")

# check if -x and -y flags were given

for i in range(0, len(arguments)):

if "-x" in arguments[i]:

x_start = int(arguments[i+1]) # the argument after "-x"

x_end = int(arguments[i+2]) # the 2nd argument after "-x"

j = i # save the index of "-x" to j

if "-y" in arguments[i]: # same as for "-x"

y_start = float(arguments[i+1])

y_end = float(arguments[i+2])

k = i

# if the -x and/or -y flags were given, delete them and the following two values from sys.argv.

# This is why we saved the indices into j and k.

if j:

del arguments[j:j + 3] # delete "-x" and the two items after this

if k:

del arguments[k - 3:k] # as above, but -3 for the 3 items that have already been deleted

elif k:

del arguments[k:k + 3]

Note: If we want to process more than a few command line arguments,

argparseor other high-level parsers will be a more suitable choice than `sys.argv

What’s left in the list of arguments now? Yes, the filenames of CSVs containing spectra. And that can be any number of spectra! However many the user passes, they will all be plotted. Thanks to the functions we defined above, that will be easy.

# Process spectra in batch

importedData = {}

normalizedData = {}

# iterate all filenames given when this script was called

for i in range(1, len(arguments)): # start at 1 since arguments[0] is just the name of the script

file = arguments[i] # for convenience

if ".csv" not in file: # exclude non-CSV files

print("WARNING: Encountered invalid file type for {} (only .csv allowed). Skipping this file.".format(file))

elif "_normalized.csv" in file: # exclude previously normalized files

print("Skipping previously processed file {}".format(file))

else: # process everything else

# import

importedData[file] = import_irdata_from_csv(file)

# normalize Y values of df

normalizedData[file] = normalize_y(importedData[file])

# plot the spectra

plot_spectrum(normalizedData[file], file, x_start, x_end, y_start, y_end)

# save normalized data to csv

newfile = file.strip(".csv")+"_normalized.csv"

normalizedData[file].to_csv(newfile, header=False, index=False)

plt.show() # ensure all plots stay open

And that’s it! You have now seen how to plot an IR spectrum in python and call your python script from a terminal, where you can allow the user to pass his custom parameters to quickly plot spectra.

Great that you made it until here!

You can find the full python code with a slightly more verbose output on my GitHub.